Across industries — agriculture, logistics, healthcare, and manufacturing alike — operational decisions often hinge on speed. The story below originates in livestock management, yet the underlying challenge resonates far beyond: massive volumes of unstructured communication conceal crucial signals that are too easily overlooked.

Every day, farm managers and staff exchange thousands of informal messages about feeding schedules, animal health, or incident reports. Hidden within this continuous flow are subtle warning signs that can make the difference between prevention and crisis.

For years, this unstructured communication couldn’t be systematically analyzed. The outcome was predictable: delayed reactions, inefficiencies, and missed anomalies. Japfa set out to change this by embedding artificial intelligence directly into its communication processes. We partnered with them to industrialize the solution and deploy it on Snowflake for enterprise-grade performance and reliability at scale.

As the solution architect, my goal was simple: keep the user experience natural, preserve trust in the data, and let the architecture handle the complexity. What follows is a look at how this system transforms spontaneous messages into real-time, actionable insights — and the design choices that make that possible.

Capturing and Ingesting Data

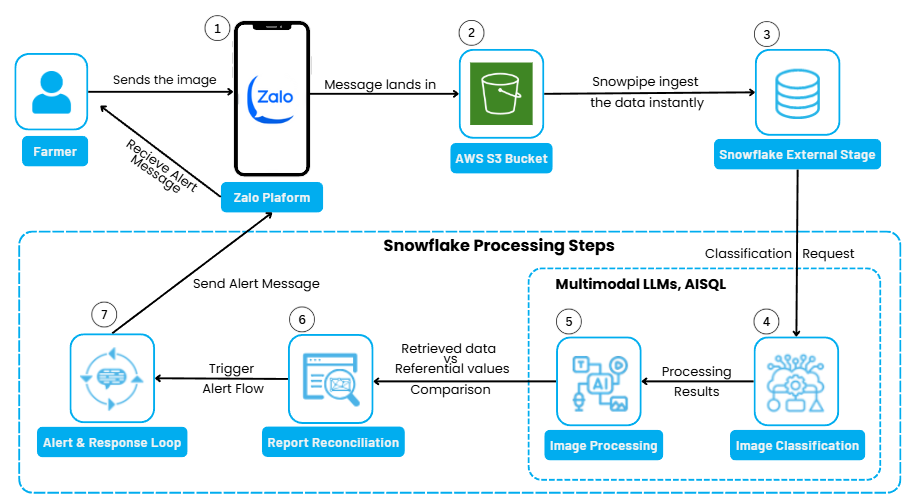

Every meaningful insight begins with a message. In this case, those messages flow through Zalo — short reports, text updates, and photos from the field. A custom-built ingestion app captures this information, transferring it to Snowflake within seconds for analysis.

Attachments, however, require a different path. Images can’t be ingested like text, so they are automatically routed to an Amazon S3 bucket, organized and labeled for easy retrieval. This ensures that both structured and unstructured data remain traceable, durable, and instantly available for downstream AI processing.
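To make "organized and labeled for easy retrieval" concrete, here is a minimal sketch of how an object key could be derived from message metadata. The key layout, the `zalo/images` prefix, and all field names are assumptions for illustration, not the layout used in the project.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Attachment:
    group_id: str       # hypothetical chat group identifier
    message_id: str     # hypothetical message identifier
    filename: str       # original file name as sent in the chat
    sent_at: datetime   # timezone-aware timestamp of the message


def attachment_key(att: Attachment, prefix: str = "zalo/images") -> str:
    """Build a date-partitioned key so an image can be traced back to its message."""
    day = att.sent_at.astimezone(timezone.utc).strftime("%Y/%m/%d")
    # Keep only the extension; the message id already makes the key unique.
    ext = att.filename.rsplit(".", 1)[-1].lower() if "." in att.filename else "bin"
    return f"{prefix}/{day}/{att.group_id}/{att.message_id}.{ext}"


example = Attachment(
    group_id="farm-12",
    message_id="msg-0001",
    filename="IMG_2041.JPG",
    sent_at=datetime(2025, 3, 4, 7, 30, tzinfo=timezone.utc),
)
print(attachment_key(example))
```

Because the key embeds the message identifier, the text row in Snowflake and the image in S3 can be joined later without a separate lookup table.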

Choosing Zalo wasn’t a matter of technology preference but practicality — meeting teams where they already communicate. The framework is platform-agnostic: tomorrow, the same pipeline could seamlessly handle WhatsApp, Telegram, or Slack messages. What truly matters is ensuring that every interaction — text or image — arrives in a consistent, AI-ready format that triggers the next step in the data flow.
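One way to picture the "consistent, AI-ready format" is a small adapter per messaging platform that maps each payload onto a shared record. The sketch below is illustrative only: both payload shapes and every field name are invented and do not reflect the real Zalo, WhatsApp, Telegram, or Slack schemas.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Message:
    """Common envelope that every downstream step can rely on."""
    source: str
    conversation_id: str
    sender_id: str
    text: str
    attachments: list[str] = field(default_factory=list)


def from_platform_a(payload: dict[str, Any]) -> Message:
    # Hypothetical payload shape for a first messaging platform.
    return Message(
        source="platform_a",
        conversation_id=str(payload["group"]),
        sender_id=str(payload["from"]),
        text=payload.get("body", "").strip(),
        attachments=list(payload.get("photos", [])),
    )


def from_platform_b(payload: dict[str, Any]) -> Message:
    # Hypothetical payload shape for a second messaging platform.
    return Message(
        source="platform_b",
        conversation_id=str(payload["channel"]),
        sender_id=str(payload["user"]),
        text=payload.get("text", "").strip(),
        attachments=[f["url"] for f in payload.get("files", [])],
    )


a = from_platform_a({"group": 42, "from": "u1", "body": " 3 dead pigs in pen 4 ", "photos": ["p.jpg"]})
b = from_platform_b({"channel": "C9", "user": "u2", "text": "Visitor at gate", "files": [{"url": "v.png"}]})
```

Adding a new platform then means writing one more adapter; nothing after ingestion needs to change.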

Processing Architecture and Flow Orchestration

Once messages enter the system, the real challenge lies in orchestrating their processing with both speed and reliability. In collaboration with Japfa’s data and operations teams, we designed an architecture rooted in three essential principles: clarity, traceability, and modular design. These same principles can apply across any high-frequency data environment — from connected farms and manufacturing lines to IoT networks and customer interaction platforms.

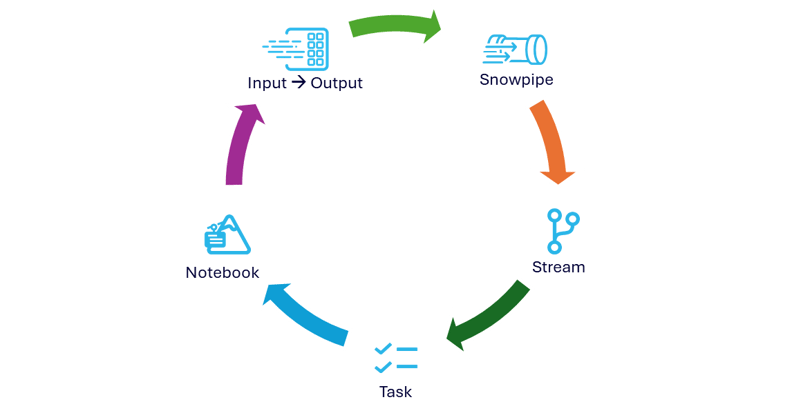

The workflow is intentionally streamlined. Each component performs a single, well-defined role: a task is linked to a specific notebook, and that notebook is triggered only when a data stream signals the arrival of new information. This event-driven approach prevents unnecessary computation and guarantees that each process runs precisely when required.

The outcome is an elegant, maintainable data flow. Every task knows its scope, every notebook executes a focused function, and each stream ensures data freshness before triggering logic. This modular orchestration enables full end-to-end visibility and makes the system adaptable to new data sources or analytical use cases without reengineering the whole pipeline.

A critical enabler of this flexibility is Snowflake’s native stream capability, which acts as a built-in Change Data Capture (CDC) layer. Instead of reprocessing entire datasets, each run reads only the rows that changed since the stream was last consumed, so compute cost tracks the volume of new data rather than the size of the table. Performance therefore stays predictable as workloads grow.
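The stream-plus-task pattern can be illustrated with a toy model in plain Python. This is a simulation of the idea, not Snowflake code: `AppendOnlyStream` and `run_task` are hypothetical names, and the real mechanism lives inside Snowflake (where a task is commonly gated with a `WHEN SYSTEM$STREAM_HAS_DATA(...)` condition).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AppendOnlyStream:
    """Toy stand-in for a change stream: an offset into an append-only table."""
    table: list[dict]
    offset: int = 0

    def has_data(self) -> bool:
        return self.offset < len(self.table)

    def consume(self) -> list[dict]:
        """Return rows added since the last read and advance the offset."""
        delta = self.table[self.offset:]
        self.offset = len(self.table)
        return delta


def run_task(stream: AppendOnlyStream, notebook: Callable[[list[dict]], None]) -> bool:
    """Run the notebook only when the stream reports new rows; return whether it ran."""
    if not stream.has_data():
        return False
    notebook(stream.consume())
    return True


messages = [{"id": 1}, {"id": 2}]
stream = AppendOnlyStream(messages)
processed: list[dict] = []

first = run_task(stream, processed.extend)    # new rows present, notebook runs
second = run_task(stream, processed.extend)   # nothing new, notebook is skipped
messages.append({"id": 3})
third = run_task(stream, processed.extend)    # only the new row is processed
```

The second call does no work at all, which is the property that keeps compute proportional to the delta.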

In practical terms, every phase — from data ingestion to classification and alerting — operates exclusively on new or updated data. The result is a pipeline that’s resource-efficient, transparent, and effortlessly scalable, ready to evolve alongside Japfa’s operational and analytical ambitions.

Intelligent Classification with AISQL

When data enters the pipeline, the first crucial task is understanding what it actually represents. Before any alerts can be generated or metrics assessed, the system must determine both the nature of the content — whether it’s plain text, an image, or a mix of both — and its business relevance: is the message about biosecurity, animal health, or flock performance?

This classification stage goes well beyond simple tagging. It incorporates a series of essential data preparation and engineering routines that make later steps seamless and reliable. Incoming messages are automatically linked with their image files stored in Amazon S3, then connected to master data such as user groups and conversation identifiers. Duplicate entries are removed, and formats are standardized so that downstream processes can interpret them uniformly.
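The preparation routines described above can be sketched in a few lines. In the project this logic runs inside Snowflake; the Python version below only illustrates the steps, and every field name (`message_id`, `group_id`, `image_key`, `group_name`) is an assumption.

```python
def prepare(
    messages: list[dict],
    image_keys: dict[str, str],
    groups: dict[str, str],
) -> list[dict]:
    """Deduplicate by message id, normalize whitespace, attach image key and group name."""
    seen: set[str] = set()
    prepared: list[dict] = []
    for msg in messages:
        if msg["message_id"] in seen:
            continue  # drop duplicate deliveries of the same message
        seen.add(msg["message_id"])
        prepared.append({
            "message_id": msg["message_id"],
            # Collapse runs of spaces and newlines into single spaces.
            "text": " ".join(msg.get("text", "").split()),
            # None when the message has no stored image.
            "image_key": image_keys.get(msg["message_id"]),
            # Fall back to "unknown" when master data has no entry.
            "group_name": groups.get(msg["group_id"], "unknown"),
        })
    return prepared


rows = prepare(
    messages=[
        {"message_id": "m1", "group_id": "g1", "text": "  Feed  delivered \n on time "},
        {"message_id": "m1", "group_id": "g1", "text": "  Feed  delivered \n on time "},
        {"message_id": "m2", "group_id": "g9", "text": "See photo"},
    ],
    image_keys={"m2": "zalo/images/m2.jpg"},
    groups={"g1": "Farm North"},
)
```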

The core of this layer runs directly within Snowflake, using AISQL. Early tests compared the AI_COMPLETE and AI_CLASSIFY functions — and while both produced comparable accuracy, AI_CLASSIFY proved notably more efficient, consuming fewer tokens and therefore reducing costs. That optimization turned out to be critical: it allows the system to process thousands of daily communications without sacrificing performance or scalability.
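As a rough illustration of what a classification call might look like, the helper below assembles a query that applies `AI_CLASSIFY` to a text column and reads the first returned label. The table name, column names, and category list are hypothetical, and the exact query used in the project is not shown in this article; treat this as a sketch to check against the Snowflake documentation.

```python
CATEGORIES = ["biosecurity", "animal health", "flock performance"]


def sql_literal(value: str) -> str:
    """Quote a string as a SQL literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_classify_sql(table: str, text_column: str, categories: list[str]) -> str:
    """Return a query that labels each row with its first predicted category.

    Assumes `table` and `text_column` are trusted identifiers; they are not escaped here.
    """
    labels = ", ".join(sql_literal(c) for c in categories)
    return (
        "SELECT message_id,\n"
        f"       AI_CLASSIFY({text_column}, [{labels}]):labels[0]::STRING AS category\n"
        f"FROM {table}"
    )


sql = build_classify_sql("new_messages", "message_text", CATEGORIES)
print(sql)
```

Passing a fixed list of categories is also what keeps the call cheap: the model returns a label rather than generating free text.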

Once classified, each item is routed deterministically to the appropriate workflow, so that alerts, insights, and automated responses are executed within the correct operational context. The result is a system that combines AI with data discipline, delivering real-time clarity from what was once unstructured noise.

Use Case–Specific Models and Processing Logic

Once a message is classified, it enters a dedicated workflow designed for its business use case. Each workflow, whether biosecurity, animal health, or performance tracking, runs its own tailored logic and model configuration, optimized for the type of data it handles.
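Routing a classified message to its dedicated workflow can be as simple as a lookup table. The sketch below assumes category labels and handler names that are purely illustrative; the handlers return a tag instead of doing real work.

```python
from typing import Callable


def handle_biosecurity(msg: dict) -> str:
    return f"biosecurity:{msg['message_id']}"


def handle_animal_health(msg: dict) -> str:
    return f"animal_health:{msg['message_id']}"


def handle_performance(msg: dict) -> str:
    return f"performance:{msg['message_id']}"


def handle_unclassified(msg: dict) -> str:
    # Unknown categories go to manual review rather than being dropped.
    return f"manual_review:{msg['message_id']}"


# One entry per use case; adding a workflow means adding one line here.
HANDLERS: dict[str, Callable[[dict], str]] = {
    "biosecurity": handle_biosecurity,
    "animal_health": handle_animal_health,
    "performance": handle_performance,
}


def route(msg: dict) -> str:
    """Send a classified message to the handler registered for its category."""
    handler = HANDLERS.get(msg.get("category", ""), handle_unclassified)
    return handler(msg)


routed = route({"message_id": "m1", "category": "animal_health"})
fallback = route({"message_id": "m2", "category": "weather"})
```

The same category always reaches the same handler, which is what makes the routing deterministic and easy to audit.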

For text-based interactions, the system primarily leverages AI_COMPLETE powered by Llama-Maverick models, which provided the best trade-off between precision, scalability, and cost-efficiency. Handling images, however, required a different strategy — one that could interpret unstructured visual content and turn it into meaningful, structured data.

To achieve this, we benchmarked several complementary methods:

- AISQL: Using prompt-based classification models such as Claude, Pixtral, Llama, and GPT.
- OCR through Python frameworks: Including EasyOCR, Tesseract, and PaddleOCR, suitable for lightweight image-to-text extraction.
- Document AI: A Snowflake-native feature built on Arctic-TILT, offering customizable training for specialized use cases.

The most challenging example came from the swine mortality monitoring scenario, where farmers often share photos as incident evidence. Converting these images into clean, structured data required more than simple categorization.

We tested multiple paths. AISQL, driven by flexible prompt configurations, produced strong, stable results and integrated seamlessly with the Snowflake pipeline. Traditional OCR libraries, even when enhanced with preprocessing and containerization, struggled to maintain accuracy under variable field conditions like lighting, angle, or background clutter. Document AI proved highly promising thanks to its ability to fine-tune model performance — but its limited regional availability ruled it out for production deployment.

Ultimately, the most balanced and production-ready solution came from AI_COMPLETE with OpenAI’s GPT-4.x models, which consistently delivered accurate outputs across languages, image types, and quality levels. While we also evaluated AI_EXTRACT, it could not match the precision or adaptability of the GPT-4.x approach.

This modular, use-case-specific architecture ensures that each data type — whether message, photo, or hybrid input — is processed by the most suitable intelligence layer, while Snowflake orchestrates the entire flow consistently and efficiently. The result is a scalable framework where every component remains independent yet harmonized, ready to expand to new operational domains from agriculture to manufacturing and retail.

Assessment and Alert Logic

Once the incoming data has been cleaned, structured, and enriched, the system transitions into the assessment phase — where insights are verified and contextualized. At this point, the pipeline applies different validation rules depending on the use case.

In operational contexts like livestock management, for instance, mortality reports are automatically compared with reference baselines such as flock performance metrics to detect unusual loss patterns. In other cases, like biosecurity compliance, the engine evaluates data for anomalies — for example, checking that visitor photos align with authorized personnel lists or that reported activities match approved procedures.
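Both kinds of check can be expressed as small rule functions. The version below is a minimal sketch: the tolerance factor, the baseline rate, and the idea of comparing extracted visitor names against a set are assumptions chosen for illustration, not the thresholds or rules used in production.

```python
def mortality_alert(
    deaths: int,
    flock_size: int,
    baseline_rate: float,
    tolerance: float = 2.0,  # illustrative factor, not a project value
) -> bool:
    """Flag a report when the observed mortality rate exceeds tolerance x baseline."""
    if flock_size <= 0:
        raise ValueError("flock_size must be positive")
    observed_rate = deaths / flock_size
    return observed_rate > tolerance * baseline_rate


def unauthorized_visitors(reported: list[str], authorized: set[str]) -> list[str]:
    """Return reported visitor names that are not on the authorized list, in order."""
    return [name for name in reported if name not in authorized]


# 30 deaths in a flock of 10,000 against a baseline of 0.1 % per day.
high_loss = mortality_alert(deaths=30, flock_size=10_000, baseline_rate=0.001)
normal_loss = mortality_alert(deaths=15, flock_size=10_000, baseline_rate=0.001)
unknown = unauthorized_visitors(["An", "Binh", "Chi"], authorized={"An", "Chi"})
```

Keeping each rule as a pure function makes it straightforward to test against historical reports before it is allowed to trigger alerts.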

This layer acts as the decision engine of the entire workflow, determining when an alert should be triggered and whether an automated response is warranted. When a reply is needed, a Generative AI component formulates a context-aware message, which is seamlessly delivered back to users via Zalo, directly within the same conversation thread where the event was first reported.

By merging structured evaluation with intelligent communication, the system closes the feedback loop in real time — ensuring that alerts, insights, and recommendations reach frontline teams instantly and in context. What begins as unstructured chatter becomes a continuous, intelligent dialogue between human operators and AI, enabling faster, data-driven action exactly where it matters most.

Why This Architecture Matters

At first glance, the framework might seem straightforward — yet its implications reach far beyond agriculture. The same design pattern can transform unstructured communication, event logs, documents, or sensor data into structured, actionable intelligence in virtually any industry.

For Japfa, this translates into real-time visibility across farming operations. In other sectors, it could enable predictive maintenance in manufacturing, continuous patient monitoring in healthcare, or automated quality assurance in logistics and retail.

The architecture achieves a careful equilibrium: it remains open and extensible, able to plug into any messaging system or AI model, while still operating entirely within Snowflake’s governed environment — ensuring enterprise-grade control, scalability, and data security.

Its key strengths include:

- Operational efficiency: Teams receive real-time alerts enriched with context, dramatically reducing reaction times in critical workflows.
- Scalability: Designed to handle both text and images today, the modular foundation can easily expand to include video, audio, or future data types without major redesign.
- Governance and security: Centralization within Snowflake provides a single source of truth, with full visibility into data access, lineage, and compliance.
- Simplicity and transparency: Every process, from ingestion to alerting, is observable and auditable. This makes the system not only efficient, but also explainable and trustworthy, which are essential qualities for operational AI.

Ultimately, this architecture shows that applied AI doesn’t have to mean added complexity. When built on Snowflake, intelligence can be simple, traceable, and scalable by design — ready to evolve with new use cases while delivering measurable value from day one.

A Glimpse into the Future of AI in Agriculture

Having led this project with Japfa, I’ve witnessed firsthand how Snowflake’s AI-native platform, combined with SBI’s pragmatic, business-driven mindset, can turn innovation into measurable impact. Our recognition as Snowflake Partner of the Year is more than an award — it’s validation of a core belief: AI succeeds when it delivers outcomes, not just experiments.

What’s most inspiring about this initiative is how it redefines the role of AI in daily operations. It’s not about dashboards or data pipelines — it’s about seamless intelligence, embedded directly into the workflow. Farmers don’t need to understand Snowpipe, AISQL, or multimodal large language models. What they experience is faster response times, fewer losses, and greater confidence that issues will be detected before they become costly.

And this is only the start. The same architectural foundation can easily extend to video-based monitoring, predictive animal health analytics, or AI-assisted decision-making at scale — and can just as effectively power solutions in manufacturing, logistics, or healthcare. Once the framework exists, the use case becomes the variable.

This is the promise of operational AI done right: invisible technology, visible impact.
